Before you read this article, please keep a few things in mind:

- This article is a personal record. I am just an ordinary movie enthusiast with no professional experience reviewing films or anime, and the opinions here are highly subjective. Please don't let them sway you; keep your own view of the movie.

- This article tries to avoid spoilers, but avoiding them entirely is impossible. Before reading, make sure you have at least watched the trailer for Suzume (Suzume no Tojimari; released in China as 铃芽之旅, "Suzume's Journey"), or have read the novel or watched the movie.

- My writing skills are poor. I never liked reading for Chinese class when I was young (well, actually, I quite like reading, I just don't do it 0v0). So this article won't have elaborate language or fancy expressions. Thank you for your understanding.

- Given the point above, it is not very realistic to expect deep insights from this article. I welcome all kinds of discussion, suggestions, criticism, and even attacks.

I should have taken plenty of photos during the movie, like the girl next to me did, to keep as memories and as material for analysis. They would have added some color to this article.

But for the sake of immersion on a first viewing, I'll save that for next time.

Viewing Conditions

I watched the first IMAX 2D screening on March 24, 2023 at the Golden Harvest Cinema (Grand IMAX Laser Store). The theater features the IMAX Commercial Laser 12.1 system with a 297-square-meter screen, which was a pleasure to watch, not to mention the impressive IMAX sound. I chose seat 15 in row 8, right in the middle, although I suspect row 6 might have been more immersive.

The regular ticket price was 100 yuan (I paid 60 yuan through a reseller on Xianyu), and the screening was at 12:00 on a Friday. Together these factors filtered out most of the disruptive audience, so the overall viewing experience was excellent (although people still moved around a little, and the couple next to me chatted a bit). There were about 30 people in the theater.

Personally, I would recommend watching this movie at a Dolby Cinema, because Dolby Atmos really does enhance this film. Although the film was adapted for IMAX 2D, the improvement in the viewing experience does not justify the increase in ticket price. Of course, this is only the ideal choice. You can pick your theater based on practical considerations such as distance, ticket price, and service, or simply conclude that people who commute four hours round trip to watch a movie are crazy.

Most people would say it's not worth spending double the time and money for a marginal improvement in the viewing experience, or they simply don't have the time or energy to look into it. For movies I like, though, I want my first viewing to be as close to perfect as possible, even if it costs a lot of time and money. Luckily, there aren't too many movies I want to watch xD

Film Review

I will comment on the following points one by one, which will also help me organize my thoughts.

Plot

For me, the plot of this film is not outstanding, and the story is very easy to follow. So I will avoid plot details where I can and focus on aspects like plot logic, pacing, and emotion.

The plot has some logical flaws. The whole premise is that Suzume falls in love with Souta at first sight. The story rationalizes this to some extent, but there are other logic issues that hurt the character development.

When I first started watching, I thought Suzume was a country bumpkin, falling head over heels for a handsome Tokyo guy at first sight. - A friend

Why does Suzume encounter so many people willing to help her? Isn't she afraid of being lured to Myanmar? - Friends, me

Well, this is just my bias. Suzume is very likable, so it's natural that people help her. I may be judging others by my own narrow standards.

Why did she suddenly forgive the cat? Does she lose all resolve the moment she sees a cute cat? - Friend

(That's true) xN

Of course, given the limits of the film's running time, such compromises are acceptable. That does not cancel out their impact on the viewing experience, though.

Apart from the logical flaws (which do matter), the pacing of the film is very comfortable. My emotions followed the movie the whole way through, and it often left me in thought. While telling a story that is sad overall, it also includes light-hearted moments to keep the atmosphere from becoming too heavy (compare the chest-tightening scenes in Your Name). As the main storyline advances, it also weaves in friendship and family affection, which enrich the film while laying the groundwork for the budding romance between the leads.

When I saw them (I forgot their names) giving Suzume clothes and a hat, I initially expected a plot where she collects many things along the journey and later uses them to achieve a specific goal. That didn't happen (and it would have been somewhat cliché anyway); these two characters played no role in the rest of the plot. I think the second subplot was unnecessary. It neither drove the plot forward nor had a significant impact on character development; it felt repetitive and dragged on. In my opinion, using that screen time to sketch in some background or add emotional depth would have been a better choice.

Let's discuss what the plot intends to convey. I am not a survivor of the 2008 Wenchuan earthquake or the 2011 earthquake in Japan, and although my hometown is prone to geological disasters, I have never experienced a severe calamity firsthand. For me, earthquakes have only been a topic of casual conversation and the occasional memory.

As part of a disaster trilogy, the film portrays disaster commendably, but Makoto Shinkai does not want to stop there. The way the imagery of "ruins" is set against the imagined, or somehow transmitted, voices and laughter of the people who once lived there is intricate. Places become ruins for many reasons: villages devastated by earthquakes, amusement parks abandoned after financial trouble, and so on. Whatever the reason, ruins no longer gather new memories, yet the traces of the people who once lived there remain. They do not fade as people change or as lives pass. When those people, now changed, come back, or when the descendants of survivors return and face the ruins, what will they see and think?

As for the ending, I won't spoil it, but I must say it was unexpected yet reasonable. It brings a moment of sudden understanding and serves as a fitting conclusion.

Well, after giving it some thought, I have to say it, or else it won't be complete.

Spoiler Alert, Including the Main Theme of the Entire Movie

The "mother" at the beginning of the movie turns out to be Suzume's future self, who gives her hope and helps her realize that the most precious things, hope and care, have always been around her. When Suzume, who has lost her mother, is in despair, there are countless people, from Aunt Tamaki to the kind strangers she meets on her journey through life, who are willing to extend a helping hand and offer her care and hope.

So, in essence, the Chinese title, *Suzume's Journey* (铃芽之旅), is quite fitting; the entire story is Suzume's journey of reconciliation with herself. As for the original title, *Suzume no Tojimari* ("Suzume's Locking Up"), Suzume's closing words, "I'm off," spoken after she closes the door, show that she has closed the door on her trauma and is stepping toward a new life.

The film also offers a kind of solace to people who have lived through disasters. Like the young Suzume, survivors may have suffered the pain of losing family and may fall into despair. But no matter what, there is always hope, and love from others, in the life ahead. Just as Suzume tells her childhood self: "I am Suzume's tomorrow!"

As for concepts such as "Tokiko," "Sadaijin," "Choking Stone," and "Otafuku," I know too little about Japanese mythology to comment, so please forgive me.

The movie deliberately leaves it ambiguous why the stool has three legs. However, based on the reviews I have seen from viewers around the world, a widely accepted interpretation is that the three-legged stool symbolizes something permanently lost in the hearts of those affected by disaster, something time can never heal.

Personal Rating: 8/10

Artwork

There is no need to say much about Makoto Shinkai's art style. Before the premiere (thanks to the promotion), I had already seen many comments comparing it to the art style of Deep Sea. As it happens, I also watched Deep Sea, and its ink-and-wash style is truly stunning. In one sentence: it may not surpass Deep Sea, but I love it.

Makoto Shinkai's scenery has always been praised, and this film maintains and even improves on that quality. The scenes of the earthquake disaster and of Takashi in particular took my breath away (especially on the big screen...).

At this moment, I really regret not taking more pictures, even though my phone's camera isn't even good enough for a thesis defense.

Here are some screenshots I took during the viewing that left a lasting impression on me

Furthermore, the artwork in those scenes is meticulous. Each one relates to a major earthquake in Japan's history and contains many symbols with special meanings (like that boat). For more detail, please refer to other articles.

That said, Suzume's tiny eyes in the long shots were genuinely hilarious on the big screen.

Personal Rating: 9/10

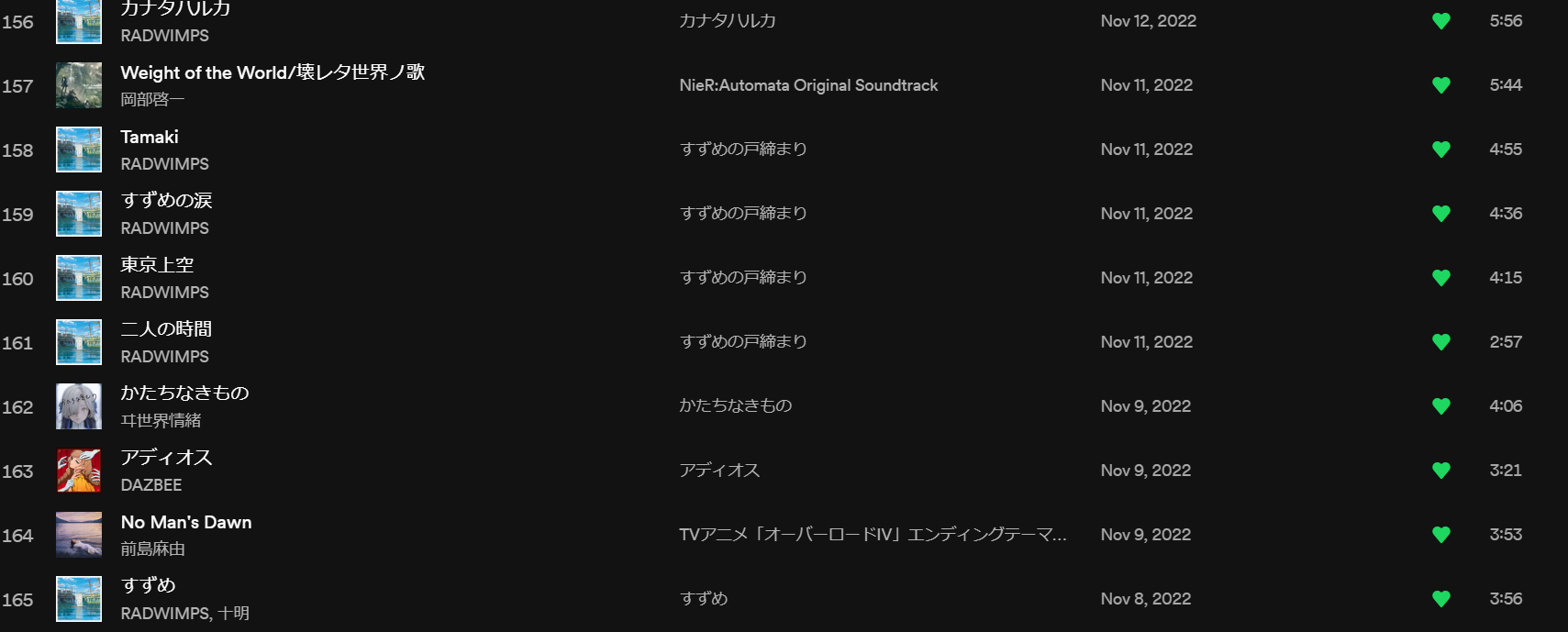

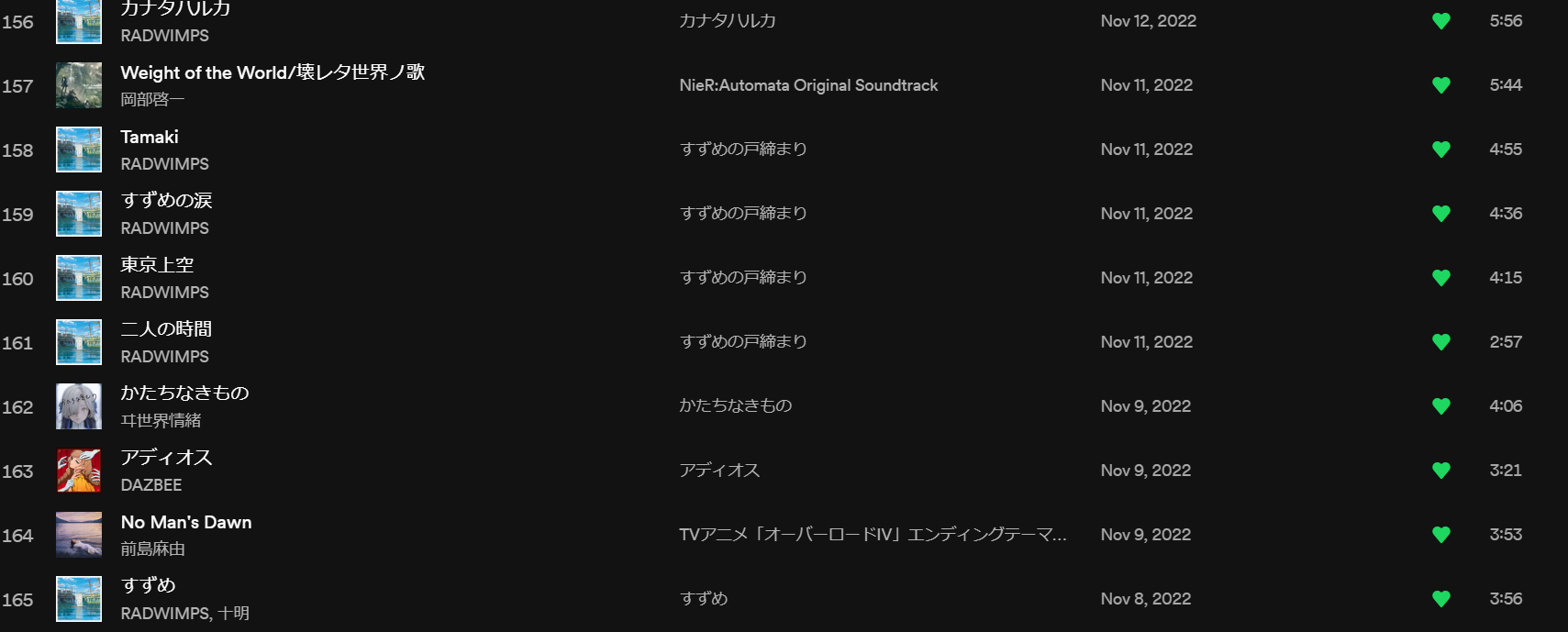

Music

Phenomenal. The music undoubtedly elevated the viewing experience of this film. While I find it challenging to describe it with more professional language, I'll just go ahead and start raving about it.

Although I listened to the entire OST as soon as it was released and fell in love with several tracks that are still on repeat, I must say, the songs' alignment with the visuals in the movie was just too cool.

When the BGM Tokyo Skies accompanied the scene of the spreading earthquake disaster, I was in a state of SHOCK. My mind went blank; maybe I was already in a "WOC" state where I retained no memory at all. That scene is likely to linger in my mind for a while.

Truth be told, many of Makoto Shinkai's shots in this movie are not outstanding on their own, but combined with the music, they blend perfectly. There is some truth to the saying that this is really a Shinkai x RADWIMPS collaboration. The duo's work on Your Name and Weathering with You was already breathtaking, but this time, working with Kazuma Jinnouchi (sorry, I had not come across his work before), they truly delivered a knockout punch. Other tracks like Night Ferry also struck a chord; emotions surged as soon as the familiar BGM played in the movie.

Lastly, the theme song. Suzume feat. Toaka is divine. Where did RADWIMPS find this singer? Its melody recurs throughout the OST, in tracks like Determination-Departure; every time it played in the movie, my heart skipped a beat, and I expect it to stay with me for a while.

The main theme at the end, Kanata Haruka by Yojiro Noda, was indeed powerful. The young woman sitting next to me was already on the verge of tears; as soon as the song played, she broke down crying, which made me feel like shedding a few manly tears too.

I have been listening to Sparkle from Your Name for 7 years. I wonder how long I will keep listening to this OST?

Personal Rating: 10/10

Ramblings

Indeed, a good film should be watched alone.

However, if anyone is willing to accompany me for a second viewing, I'd be more than happy to join.

References

https://eiga.com/movie/96308/review

https://zhihu.com

This content was generated by an LLM and may be wrong or incomplete; please refer to the Chinese version if you find an error.